Hill Space is All You Need

The constraint topology that transforms discrete selection from optimization-dependent exploration into systematic mathematical cartography

What if neural networks were excellent at math?

Most neural networks struggle with basic arithmetic. They approximate, they fail on extrapolation, and they're inconsistent. But what if there were a way to make them systematically reliable at discrete selection tasks? And is neural arithmetic, as we know it, a discrete selection task?

The Hill Space Discovery

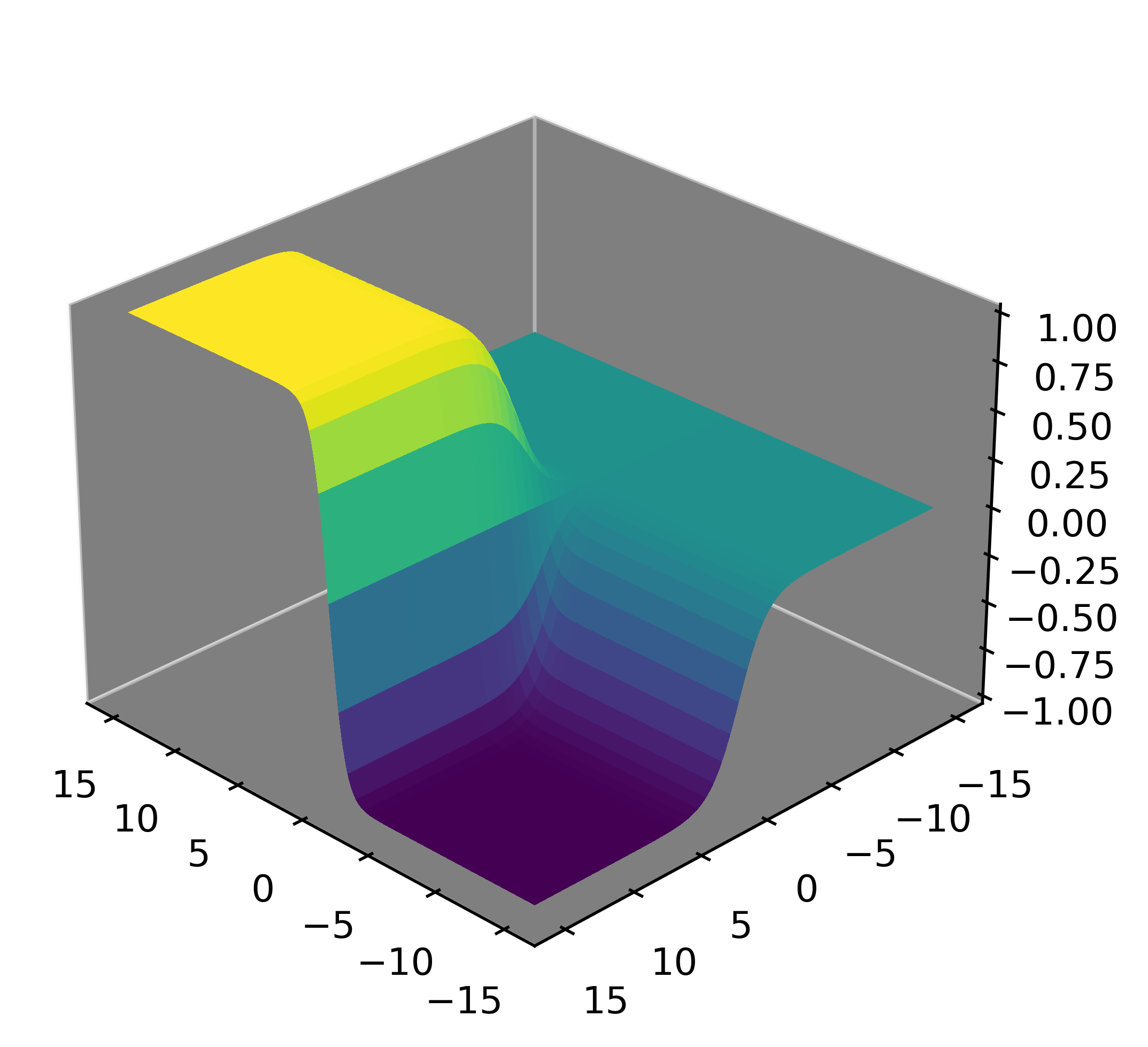

When understood and used properly, the constraint W = tanh(Ŵ) ⊙ σ(M̂) (introduced in NALU by Trask et al., 2018) creates a unique parameter topology where optimal weights for discrete operations can be calculated rather than learned. During training, the weights converge toward the optimal solution with extreme speed and reliability.
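As a minimal sketch of what "calculated rather than learned" could look like for a single scalar weight, the snippet below evaluates the constraint and picks raw parameters that land on -1, 0, or 1. The helper `raw_params_for` and the saturation constant `k` are hypothetical names introduced for illustration; they are not taken from the paper.

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def hill_weight(w_hat: float, m_hat: float) -> float:
    """Constrained weight W = tanh(w_hat) * sigmoid(m_hat)."""
    return math.tanh(w_hat) * sigmoid(m_hat)

def raw_params_for(target: int, k: float = 20.0) -> tuple[float, float]:
    """Hypothetical helper: raw (w_hat, m_hat) whose constrained weight sits at target."""
    if target not in (-1, 0, 1):
        raise ValueError("target must be -1, 0, or 1")
    if target == 0:
        return (0.0, -k)  # tanh(0) = 0, and the gate is closed as well
    return (k * target, k)  # saturated tanh sets the sign, open gate passes it through

for target in (-1, 0, 1):
    w_hat, m_hat = raw_params_for(target)
    print(target, hill_weight(w_hat, m_hat))
```

With `k = 20`, the constrained weights for -1 and 1 differ from their targets only by the tiny amount that the sigmoid gate falls short of 1.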

✅ What Hill Space Enables

- Accuracy limited primarily by floating-point precision

- Extreme extrapolation (1000x+ training range)

- Deterministic convergence, immunity to overfitting

- Trains in seconds/minutes on a consumer CPU

🎯 Enumeration Property

- Calculate optimal weights rather than learn them

- Direct primitive exploration through weight setting

- Systematic cartography of discrete selection spaces

- Linear scaling with target operations

Experience Hill Space: Interactive Primitives

It's difficult to imagine that neural arithmetic has such a simple solution. Play with these widgets to see how setting just a few weights to specific values creates reliable mathematical operations. Each primitive demonstrates machine-precision mathematics through discrete selection.

Additive Primitive

How matrix multiplication with specific weights performs mathematical operations
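A small sketch of the additive case for two inputs: the operation is a dot product, and the weight vector alone decides which arithmetic operation comes out. The function name `additive_primitive` is an illustrative assumption.

```python
def additive_primitive(inputs, weights):
    """Weighted sum of the inputs; the weights select the operation."""
    return sum(w * x for w, x in zip(weights, inputs))

a, b = 7.0, 3.0
print(additive_primitive([a, b], [1, 1]))    # addition: a + b
print(additive_primitive([a, b], [1, -1]))   # subtraction: a - b
print(additive_primitive([a, b], [-1, 0]))   # negation of the first input: -a
```

Because the weights are exactly 1, -1, or 0, the same configuration works for any input magnitude, which is where the extrapolation claim comes from.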

Exponential Primitive

How exponential primitives with specific weights perform operations
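The page does not spell out the formula behind this widget. One common formulation, used in NALU's multiplicative path, applies the weights in log space so that each weight becomes an exponent. The sketch below assumes that form and strictly positive inputs; `exponential_primitive` is an illustrative name.

```python
import math

def exponential_primitive(inputs, weights):
    """exp(sum(w_i * log(x_i))), i.e. the product of x_i ** w_i (inputs must be > 0)."""
    return math.exp(sum(w * math.log(x) for w, x in zip(weights, inputs)))

a, b = 6.0, 3.0
print(exponential_primitive([a, b], [1, 1]))    # multiplication: approximately a * b
print(exponential_primitive([a, b], [1, -1]))   # division: approximately a / b
print(exponential_primitive([a, b], [-1, 0]))   # reciprocal of the first input: approximately 1 / a
```

Results match the exact product or quotient up to floating-point rounding in `log` and `exp`.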

Unit Circle Primitive

How projecting inputs onto the unit circle allows for trigonometric operations

Trigonometric Products Primitive

How four fundamental trigonometric products enable trigonometric operations
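One plausible reading, stated here as an assumption, is that the four products for angles a and b are sin(a)sin(b), sin(a)cos(b), cos(a)sin(b), and cos(a)cos(b). Under that assumption, the standard angle-sum identities become weight vectors over those products, as in this sketch (`trig_products` and `select` are illustrative names).

```python
import math

def trig_products(a, b):
    """The four pairwise products, in a fixed (assumed) order."""
    return [
        math.sin(a) * math.sin(b),
        math.sin(a) * math.cos(b),
        math.cos(a) * math.sin(b),
        math.cos(a) * math.cos(b),
    ]

def select(products, weights):
    """Weighted sum over the products; the weights pick the identity."""
    return sum(w * p for w, p in zip(weights, products))

# Weight vectors that follow from the angle-sum and angle-difference identities.
SIN_SUM = [0, 1, 1, 0]     # sin(a + b) = sin(a)cos(b) + cos(a)sin(b)
SIN_DIFF = [0, 1, -1, 0]   # sin(a - b) = sin(a)cos(b) - cos(a)sin(b)
COS_SUM = [-1, 0, 0, 1]    # cos(a + b) = cos(a)cos(b) - sin(a)sin(b)
COS_DIFF = [1, 0, 0, 1]    # cos(a - b) = cos(a)cos(b) + sin(a)sin(b)

a, b = 0.7, 0.2
p = trig_products(a, b)
print(select(p, SIN_SUM), math.sin(a + b))
print(select(p, COS_DIFF), math.cos(a - b))
```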

The Problem: Optimization vs. Discrete Selection

Now that you've seen discrete weight configurations producing perfect mathematics, let's understand why this is remarkable. There's a fundamental tension between what neural network optimizers do naturally and what discrete selection requires.

🎯 Discrete Selection Needs

Mathematical operations require specific weight values:

[1, -1] → Subtraction

[-1, 0] → Negation

Stable operations emerge from specific weight configurations.

⚡ Optimizer Reality

Gradient descent learns unbounded weights:

[0.734, -7.812]

[-25.891, 24.956]

Optimizers need freedom to follow gradients anywhere.

Hill Space: Elegant Mapping Between Worlds

Hill Space, the constraint topology created by W = tanh(Ŵ) ⊙ σ(M̂), maps any unbounded learned weights into the [-1,1] range, where stable plateaus naturally guide optimization toward discrete selections.

Unbounded Input

Optimizers learn any values they need: -47.2, 156.8, 0.001

Constraint Function

tanh bounds to [-1,1], sigmoid provides gating

Bounded Output

Maps to [-1,1] range, naturally converging toward discrete selections
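The three stages above can be sketched for a single weight. The snippet pushes the example raw values through the constraint and also reports the analytic slope with respect to the raw weight, to illustrate the plateau behavior. The gate value `m_hat = 10.0` is an arbitrary choice for illustration, not a value from the paper.

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def hill_weight(w_hat: float, m_hat: float) -> float:
    """Constrained weight W = tanh(w_hat) * sigmoid(m_hat)."""
    return math.tanh(w_hat) * sigmoid(m_hat)

def slope_wrt_w_hat(w_hat: float, m_hat: float) -> float:
    """Analytic derivative dW/dw_hat = (1 - tanh(w_hat)^2) * sigmoid(m_hat)."""
    return (1.0 - math.tanh(w_hat) ** 2) * sigmoid(m_hat)

m_hat = 10.0  # assumed: gate almost fully open
for w_hat in (-47.2, 156.8, 0.001):
    w = hill_weight(w_hat, m_hat)
    slope = slope_wrt_w_hat(w_hat, m_hat)
    print(f"w_hat={w_hat:>8}  W={w:+.6f}  dW/dw_hat={slope:.3e}")
```

Large raw values of either sign sit on a flat plateau next to -1 or 1, where further changes to the raw weight barely move W. A raw value near zero sits on the steep part of the curve, where the optimizer still receives a strong gradient.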

Why This Matters

Ready to explore systematic discrete selection?

Dive into the paper for a detailed explanation of the Hill Space learning dynamics, a systematic framework for exploring new primitives and spaces, comprehensive experiments, and implementation details.